Machine Learning for Optimizing Workflow in the Operating Room (MLOR)

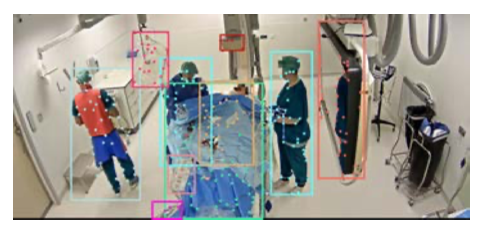

Workflow analysis aims to improve efficiency and safety in operating rooms by analyzing surgical processes and providing feedback or support. In this project, we develop algorithms that make and evaluate these observations automatically instead of relying on human experts. Object detection and person tracking are very challenging in this setting because of frequent occlusion, so we explore fusing information from multi-view images to address it. We mount five cameras at different angles in a Catheterization Laboratory (CathLab) to observe and analyze cardiac angiography procedures.

To automate the classification of workflow and personnel activities, we propose a pipeline that first calibrates the five-camera network automatically, then detects the locations of medical equipment and tracks personnel activities. Our automatic calibration framework uses Scaled-YOLOv4 to detect fixed objects in the CathLab and an artificial neural network model to extract selected key-point features from each image frame. The correspondences between these image key points and their known 3D coordinates are then used to compute a set of calibration parameters.

For object detection, we propose an algorithm based on Scaled-YOLOv4 with an auxiliary Dice loss. Because Scaled-YOLOv4 is designed for single-view datasets and does not exploit the information contained in multiple views, we also design a filter, applied after the detector, that refines the objects' bounding boxes by taking the detection results from the other cameras into account.
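The calibration step turns 2D-3D point correspondences into camera parameters. The project text does not say which solver it uses; a standard choice is the Direct Linear Transform (DLT), sketched below with NumPy. The function names `estimate_projection_matrix` and `project` are illustrative, not taken from the project. The sketch assumes at least six correspondences whose 3D points do not all lie on one plane, and it omits the coordinate normalization, lens-distortion modelling, and non-linear refinement that a production pipeline would usually add (OpenCV's `cv2.solvePnP` and `cv2.calibrateCamera` are common alternatives).

```python
import numpy as np


def estimate_projection_matrix(points_3d, points_2d):
    """Estimate a 3x4 projection matrix P (up to scale) with the Direct Linear Transform.

    points_3d: (N, 3) world coordinates of fixed key points, N >= 6, not all coplanar.
    points_2d: (N, 2) pixel coordinates of the same key points in one camera image.
    """
    points_3d = np.asarray(points_3d, dtype=float)
    points_2d = np.asarray(points_2d, dtype=float)
    if len(points_3d) < 6 or len(points_3d) != len(points_2d):
        raise ValueError("need at least 6 matching 2D-3D correspondences")
    rows = []
    zeros = np.zeros(4)
    for (X, Y, Z), (u, v) in zip(points_3d, points_2d):
        Xh = np.array([X, Y, Z, 1.0])
        # u = (p1 . Xh) / (p3 . Xh) and v = (p2 . Xh) / (p3 . Xh) give two linear
        # equations in the 12 unknown entries of P (rows p1, p2, p3).
        rows.append(np.concatenate([Xh, zeros, -u * Xh]))
        rows.append(np.concatenate([zeros, Xh, -v * Xh]))
    A = np.vstack(rows)
    # Least-squares solution of A p = 0 with ||p|| = 1: the right singular vector
    # belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    return vt[-1].reshape(3, 4)


def project(P, points_3d):
    """Project (N, 3) world points with P and return (N, 2) pixel coordinates."""
    points_3d = np.asarray(points_3d, dtype=float)
    homogeneous = np.hstack([points_3d, np.ones((len(points_3d), 1))])
    uvw = homogeneous @ P.T
    return uvw[:, :2] / uvw[:, 2:3]
```

A quick sanity check is to synthesize a camera, project random 3D points with it, and confirm that the estimated matrix reprojects them with negligible error. Because P is only defined up to scale, comparing reprojections is more meaningful than comparing matrix entries.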
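The detector is described as Scaled-YOLOv4 with an auxiliary Dice loss, but the text does not specify what the Dice term is computed on. The sketch below assumes it compares a predicted probability map (for example, a per-pixel object mask) with a binary target map, and that it is added to the usual detection loss with a hypothetical weight `dice_weight`; `soft_dice_loss` and `combined_loss` are illustrative names. It uses NumPy for readability; during training the same formula would be written with the framework's tensor operations so that it can be differentiated.

```python
import numpy as np


def soft_dice_loss(pred, target, eps=1e-6):
    """Soft Dice loss: 1 - (2 * sum(p * t) + eps) / (sum(p) + sum(t) + eps).

    pred: predicted probabilities in [0, 1]; target: binary ground truth, same size.
    eps keeps the ratio defined when both maps are empty.
    """
    p = np.asarray(pred, dtype=float).ravel()
    t = np.asarray(target, dtype=float).ravel()
    if p.shape != t.shape:
        raise ValueError("pred and target must have the same number of elements")
    intersection = np.sum(p * t)
    return float(1.0 - (2.0 * intersection + eps) / (np.sum(p) + np.sum(t) + eps))


def combined_loss(detection_loss, pred_mask, target_mask, dice_weight=0.5):
    """Hypothetical total loss: the usual detection loss plus a weighted Dice term."""
    return detection_loss + dice_weight * soft_dice_loss(pred_mask, target_mask)
```

The Dice term is 0 for a perfect overlap and approaches 1 for disjoint maps, and because it is normalized by the total foreground it is less dominated by the large background area than a per-pixel loss would be.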
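The multi-view filter is only described at a high level. One hypothetical way to let the other cameras vote on a detection, assuming every camera is calibrated with a 3x4 projection matrix and that the same object has already been matched across at least three views, is a leave-one-out consistency check: triangulate the box centers from the other views, reproject the resulting 3D point into the current view, and flag the box if the point lands outside it. The function names and the `margin` parameter are illustrative, and box centers are only a rough proxy for a common 3D point.

```python
import numpy as np


def triangulate(projections, points_2d):
    """Linear (DLT) triangulation of one 3D point seen in two or more calibrated views."""
    rows = []
    for P, (u, v) in zip(projections, points_2d):
        # From u = (P[0] . X) / (P[2] . X), and likewise for v with P[1].
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    _, _, vt = np.linalg.svd(np.vstack(rows))
    X = vt[-1]
    return X[:3] / X[3]  # back from homogeneous coordinates


def project(P, X):
    """Project a 3D point X with a 3x4 matrix P and return pixel coordinates (u, v)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


def leave_one_out_consistency(projections, boxes, margin=10.0):
    """Hypothetical multi-view check for one object matched across >= 3 views.

    boxes[i] = (x1, y1, x2, y2) is the object's box in camera i. Returns one flag per
    view: True if the point triangulated from the other views reprojects inside this
    view's box, enlarged by `margin` pixels on every side.
    """
    if len(boxes) < 3:
        raise ValueError("need the object in at least three views")
    centres = [((x1 + x2) / 2.0, (y1 + y2) / 2.0) for x1, y1, x2, y2 in boxes]
    flags = []
    for i, (x1, y1, x2, y2) in enumerate(boxes):
        others = [j for j in range(len(boxes)) if j != i]
        X = triangulate([projections[j] for j in others], [centres[j] for j in others])
        u, v = project(projections[i], X)
        flags.append(bool(x1 - margin <= u <= x2 + margin and y1 - margin <= v <= y2 + margin))
    return flags
```

Flagged boxes could then be dropped or re-estimated from the other views; with five cameras a single bad detection is outvoted more easily than with three. How the project's filter actually refines the boxes is not described here.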

Project data

| Starting date: | September 2021 |
|---|---|
| Closing date: | (not specified) |
| Contact: | Justin Dauwels |