MSc thesis project proposal

Transformer-based World Models for Continuous-Action Environments

Reinforcement learning has changed the way computers tackle individual tasks, achieving remarkable feats such as outperforming humans in complex games like Go and Dota [1]. At the forefront of this progress is deep reinforcement learning (deep RL), which has become the primary approach for building capable agents in demanding scenarios. A significant breakthrough in this area has been the advent of World Models (WMs). These models have performed strongly across a range of game genres, including arcade, real-time strategy, and board games, games with imperfect information, and open-world environments such as Minecraft [2]. Despite these successes, such methods are often criticized for their low sample efficiency.

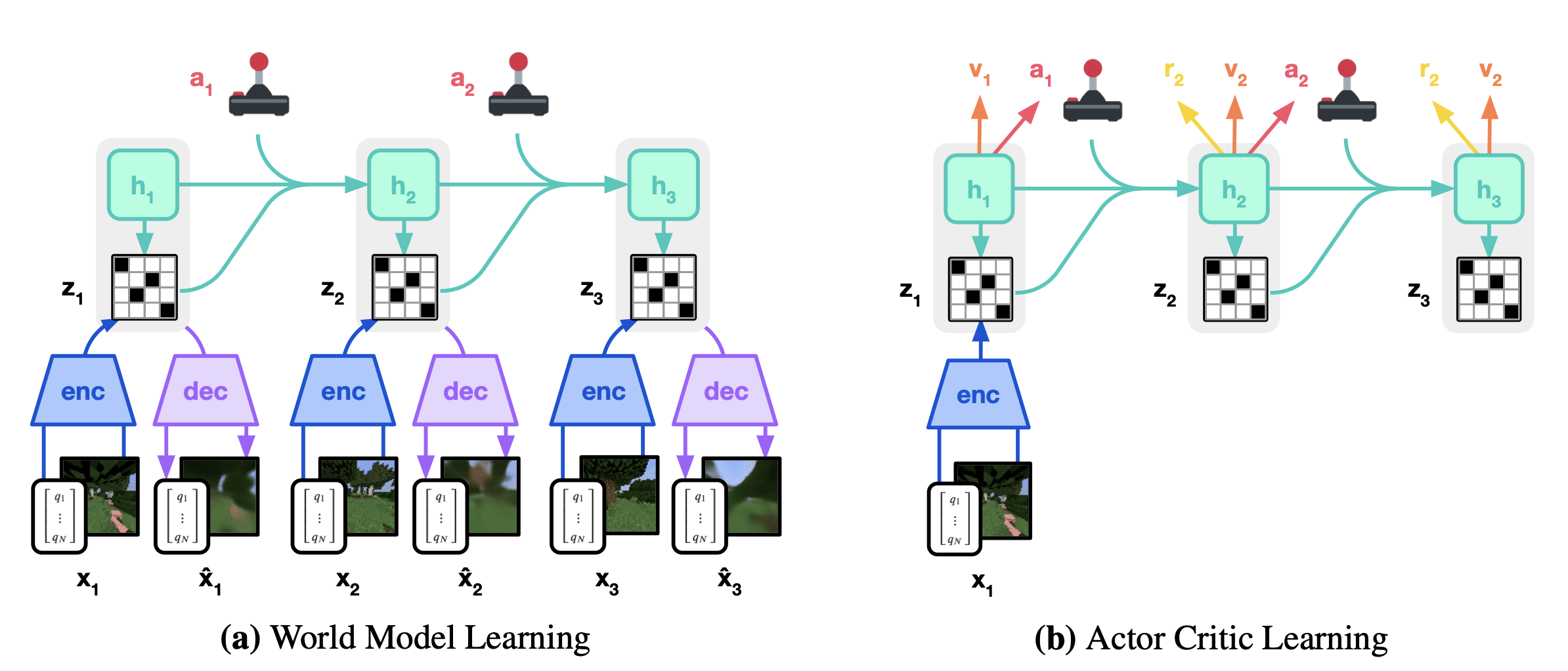

In response to this challenge, various transformer-based World Models have been proposed over the past year. However, these models predominantly use discrete representations, which largely limits their application to environments with discrete actions. This thesis proposes to investigate and develop methods that extend such models to environments with continuous action spaces, such as AntMaze and the DeepMind Control Suite. The student will explore and implement several techniques for transforming continuous actions into discrete tokens. The work will include a comparison of our baseline model, a World Model that uses Transformer-XL [3] to model world dynamics, against state-of-the-art models such as DreamerV3 [2], TWM, Groot, and others.
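As an illustration of what turning continuous actions into discrete tokens can look like, the sketch below uses uniform per-dimension binning. This is only one simple candidate among the techniques the thesis would explore, not the method of the baseline model or of the linked codebase. The function names, the bin count, and the action bounds are hypothetical choices made for this example.

```python
import numpy as np


def discretize_action(action, low, high, num_bins):
    """Map each continuous action dimension to an integer token in [0, num_bins - 1].

    Assumption: actions are bounded in [low, high] per dimension, and each
    dimension is tokenized independently with equally wide bins.
    """
    action = np.clip(np.asarray(action, dtype=np.float64), low, high)
    scaled = (action - low) / (high - low)            # rescale to [0, 1]
    tokens = np.floor(scaled * num_bins).astype(np.int64)
    return np.minimum(tokens, num_bins - 1)           # action == high falls in the last bin


def undiscretize_action(tokens, low, high, num_bins):
    """Map tokens back to continuous actions using the center of each bin."""
    centers = (np.asarray(tokens, dtype=np.float64) + 0.5) / num_bins
    return low + centers * (high - low)


# Hypothetical 4-dimensional action in [-1, 1], tokenized with 4 bins per dimension.
action = np.array([-1.0, -0.1, 0.0, 1.0])
tokens = discretize_action(action, low=-1.0, high=1.0, num_bins=4)
recovered = undiscretize_action(tokens, low=-1.0, high=1.0, num_bins=4)
```

With this scheme the reconstruction error per dimension is at most half a bin width, so the bin count trades token vocabulary size against action precision.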

Throughout this thesis, the student will receive step-by-step guidance. As a starting point for the research, we provide access to a well-maintained codebase, available at https://github.com/Cmeo97/iris. This codebase is intended to serve as a foundation that the student can build on and contribute to.

Supervisors:

Cristian Meo (c.meo@tudelft.nl)

Justin Dauwels (j.h.g.dauwels@tudelft.nl)

[1] Transformers are Sample-Efficient World Models, V. Micheli et al. 2023, https://arxiv.org/abs/2209.00588

[2] Mastering Diverse Domains through World Models, D. Hafner et al. 2023, https://danijar.com/project/dreamerv3/

[3] Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context, Z. Dai et al. 2019, https://arxiv.org/abs/1901.02860

Contact

dr.ir. Justin Dauwels

Signal Processing Systems Group

Department of Microelectronics

Last modified: 2023-12-22